Dr Oya Celiktutan

Reader in AI and Robotics

Research interests

- Engineering

Biography

Dr Oya Celiktutan is a Reader at the Centre for Robotics Research in the Department of Engineering and leads the Social AI & Robotics Laboratory. She received a BSc in Electronics Engineering from Uludag University, and an MSc and a PhD in Electrical and Electronics Engineering from Bogazici University, Turkey. During her doctoral studies, she was a visiting researcher at the National Institute of Applied Sciences of Lyon, France. After completing her PhD, she moved to the United Kingdom and worked on several projects as a postdoctoral researcher, successively at Queen Mary University of London, the University of Cambridge, and Imperial College London. In 2018, she joined King’s College London.

Dr Celiktutan’s research focuses on multimodal machine learning for developing autonomous agents, such as robots and virtual agents, that can interact seamlessly with humans. This involves tackling challenges in multimodal perception, understanding and forecasting human behaviour, and advancing the navigation, manipulation, and social awareness skills of these agents. Her work has been supported by EPSRC, The Royal Society, and EU Horizon, as well as through industrial collaborations. She received the EPSRC New Investigator Award in 2020. Her team’s research has been recognised with several awards, including the Best Paper Award at IEEE RO-MAN 2022, the NVIDIA CCS Best Student Paper Award Runner Up at IEEE FG 2021, and the First Place Award and Honourable Mention Award at the ICCV UDIVA Challenge 2021. She was promoted to Reader in AI and Robotics in August 2024.

Research Interests

- Multimodal Machine Learning

- Human-Robot Interaction

- Human Behaviour Analysis and Synthesis

- Generative Models

- Continual Learning

- Reinforcement Learning

More Information

Research

Design & Mechatronics

Fusing mechanical, electrical and control engineering.

Data-Centric Engineering

Applying machine learning to engineering challenges

Centre for Robotics Research

The group develops solutions to critical challenges faced in society where robot-centric approaches can improve outcomes.

Centre for Technology and the Body

Stories of embodied technology: from the plough to the touchscreen

News

King's Culture announces Creative Practice Catalyst Seed Fund recipients

King's Culture announce the recipients of a new creative practice seed fund, enabling researchers and academics to consolidate relationships with partners in...

Launch event marks the opening of Glowbot Garden

Giant soft robots take over Strand Aldwych

Developing A New Wave Of Soft Robots

The Department of Engineering has been working in collaboration with Air Giants.

Wonderful interactive creatures come to the Strand this January

Approach, touch and interact with giant soft robots in a free, outdoor experience like no other, inspired by a collaboration with King's robotics research

King's scientists explore the effects of AI on human life

Bringing the Human to the Artificial presents cutting-edge AI research at King’s.

NMES academic promotions

New readers and senior lecturers in NMES

Events

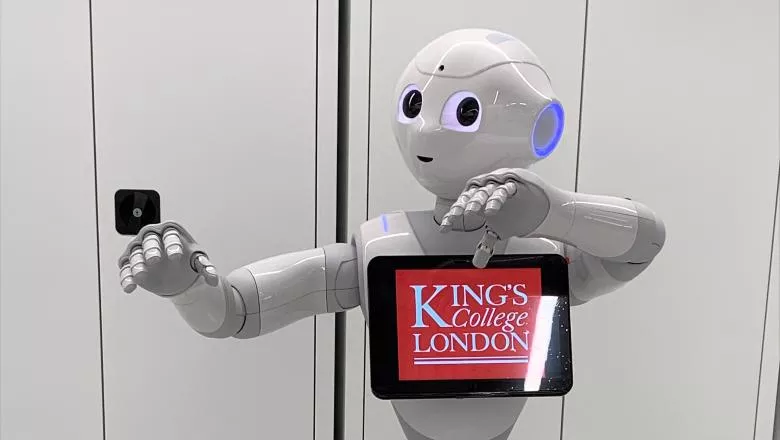

Meet Pepper, a friendly humanoid robot

Have a chat with Pepper and King's roboticists to learn about the technology behind Pepper and the aims of our research.

Please note: this event has passed.

Features

Meet: Dr Oya Çeliktutan

Dr Oya Çeliktutan builds the kind of robots we want to be around: robots that can read social cues, respond appropriately, and stay calm under pressure.

Spotlight

Robots and humans working together

The first trial of a Human Support Robot
