The event focused on the opportunities of AI for the Armed Forces sector, but also on some of the risks. It’s about getting a balance between the risks and opportunities and this event aimed to help us develop this consensus.

Dr Daniel Leightley, Lecturer in Digital Health Sciences at King’s.

18 October 2024

Exploring the role of AI in the Armed Forces

Earlier this week, academics came together with charity leaders, industry members and civil servants for an event at King’s College London that explored opportunities for the use of artificial intelligence (AI) in the Armed Forces sector.

Organised by the Department of Population Health Sciences at King’s in partnership with Combat Stress and funded by the Forces in Mind Trust, the event aimed to build a consensus within the Armed Forces charitable sector on the use of AI.

There is currently little guidance on the use of AI within the charitable sector. According to a survey conducted by the Department of Population Health Sciences ahead of the event, there is fear around its use within the Armed Forces charitable sector. This means there could be missed opportunities to use AI to improve charity functions such as resource allocation, service delivery and engagement with clients.

During the event, the 40 attendees discussed how charities within the Armed Forces sector could make use of AI, what barriers exist for the use of AI, and what policies should be in place for its use within the sector.

Dr Leightley’s research is focused on military health, including on how technology can be used to improve the health and wellbeing of serving and ex-serving personnel.

“The attendees were a mix of academics and charity, industry and government members, which made for really lively discussions with broad perspectives,” he added.

The event was split into three sessions. After a welcome by Professor Dominic Murphy, Co-Director of King's Centre for Military Health Research and Head of Research at Combat Stress, the first session focused on what AI is and how it is currently being used in the sector.

Dr Leightley spoke about ‘Demystifying AI: Separating Hype from Reality.’ His talk highlighted the hype around AI within the media, the real capabilities of AI, and its present limitations, and called for further improvements in guidelines, technology access and collaboration across the sector.

The session also included a talk from Zoe Amar FCIM, founder of Zoe Amar Digital, who discussed how AI is being adopted within charities. Her presentation highlighted the growing use of AI, but pointed out that while AI offers opportunities, many charities still face barriers, such as a lack of skills and concerns over data privacy, GDPR, and ethical considerations. Also, Dr Nick Cummins, Lecturer in AI for Speech Analysis at King’s, provided a practical example of how AI can be used to analyse speech as a digital biomarker. His work emphasised the potential of AI in clinical decision-making, while also acknowledging the challenges, including variability in speech analysis and the need for reliable datasets.

The second session explored the opportunities and risks associated with using AI. Dr Stuart Middleton, Associate Professor at the University of Southampton, presented on the capabilities of Large Language Models (LLMs), focusing on their potential applications in areas such as mental health and defence, while acknowledging the challenges posed by model biases and the need for responsible AI integration.

Samantha Ahern from the Centre of Advanced Research Computing at UCL discussed the complexities of designing and implementing AI tools, addressing issues such as the risks of foundation models and the importance of explainable AI in healthcare. Finally, Dr Stella Harrison, Senior Analyst at RAND UK, explored AI regulation, highlighting challenges such as the ‘pacing problem’ and cross-border consensus, alongside the implications of the EU AI Act and UK regulatory frameworks.

Understanding the opportunities AI can offer and the risks that need to be managed is key for those working with and supporting the Armed Forces community. It was great to support an event starting the conversation between senior leaders to explore these issues and discuss how we can most effectively utilise and manage AI to support our community.

Michelle Alston, Chief Executive of Forces in Mind Trust

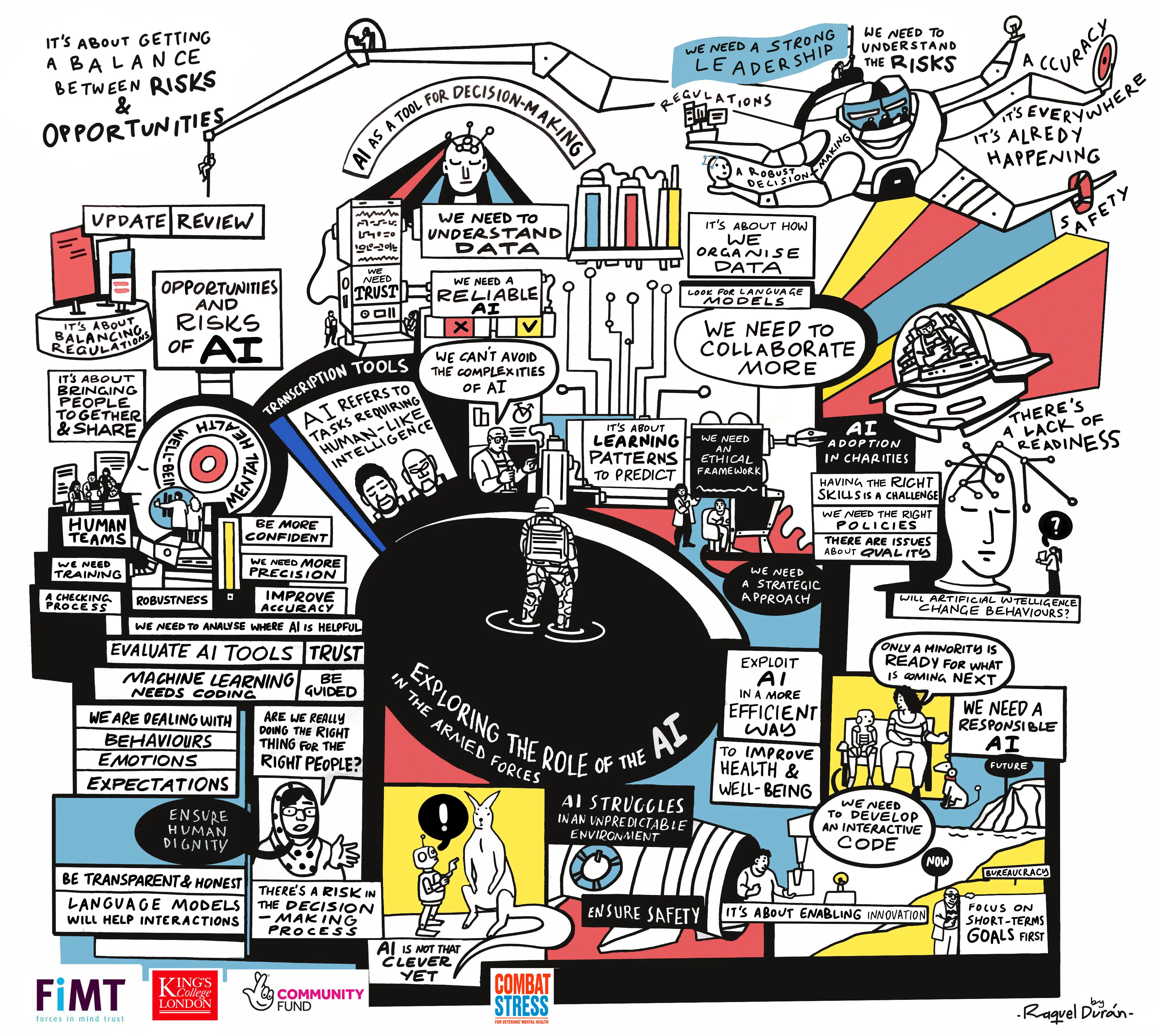

The final session of the day was a roundtable discussion, where attendees could delve deeper into the topics of the day. A live scribe attended the event and created an illustration of the conversations.

Some of the important points raised included: the need for an ethical framework and the right policies to put AI into practice within the sector; how safety can be ensured when using AI; the need for appropriate training so that people can make use of AI safely and confidently; and the need for more collaboration within the sector to understand the opportunities AI offers to improve services for clients.

The findings of the roundtable discussions will be aggregated to develop a consensus statement and shared with the community later in the year.